This article will teach you what multithreading and multicore are, and in which circumstances each can be used.

Does your nerd friend keep going on about his professional quirks? Always wanting to parallelize and optimize his time? Do you wish to understand it too, and save time by parallelizing your Python programs? Then this article is what you need!

Thanks to a small dose of parallelism, some Python and lots of love, you will be able to save a lot of time. The goal here is to shorten coffee breaks and to stop having time to watch Star Wars Episode IV right in your terminal:

```shell
telnet towel.blinkenlights.nl
```

Here, you will learn to watch Star Wars and code while drinking your coffee 😉

You’ll first need a bit of vocabulary. If you know the difference between multithreading and multicore, you can skip to the Python section, and if you know what Python is, you can go directly to the ‘threading module’ part. If you already know how to code in Python and have complete mastery of parallelism, you can either stop reading the article here and save 38 minutes (an estimate based on a not-so-serious study), or read this article and have a great time doing so.

-

Multicore: Are we talking about octopuses here? (Translate it into French to get the joke; it’s actually kind of funny.)

The word “core”, or as we say in French “coeur”, which literally translates to “heart”, is not so well chosen: we prefer the analogy with the brain (and yes, the octopus also has multiple brains). Bear with us, please; we originally wrote this article in French. With several cores, your computer can run multiple programs or applications (mail, web browser, …) at the same time; the technical term is “in parallel”. Now imagine you had multiple brains: you could read this article and do an E. coli transformation at the same time.

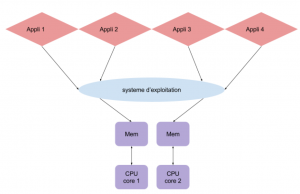

The operating system, which can be seen as a scheduler, runs the different applications by distributing them among the different cores (CPUs). The computer can then split the work between the cores and do several things at the same time. The system depicted in the diagram has 2 cores, so this computer can run applications 1 and 2 at the same time, for example browsing the internet while using the terminal.
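If you are curious how many cores the operating system can spread your applications across, Python's standard library can tell you. A minimal sketch; the number printed depends entirely on your machine:

```python
import os

# os.cpu_count() returns the number of logical CPUs reported by the operating
# system (hyper-threading can make this larger than the number of physical
# cores), or None if it cannot be determined.
n_cores = os.cpu_count()
print(f"This machine exposes {n_cores} logical CPUs")
```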

-

Multithreading: Are we going to talk about sewing?

Multithreading allows your program to perform multiple tasks at the same time.

In a single-threaded app, a task prevents other tasks from running until it is done!!

Conversely, a multithreaded app makes more efficient use of the central processing unit, which stays busy because the tasks execute concurrently.

Here, the application contains 3 tasks that run concurrently and therefore advance at the same time: for the same use of the CPU, we can start all 3 tasks at once.
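Here is a minimal sketch of that idea with the standard `threading` module. The three tasks are hypothetical stand-ins that simply wait with `time.sleep` (as a task blocked on a file or the network would); because their waits overlap, the whole run takes roughly as long as one task rather than three:

```python
import threading
import time

def task(name, duration, results):
    # Hypothetical task: waits `duration` seconds, then records its name
    time.sleep(duration)
    results.append(name)

results = []
threads = [
    threading.Thread(target=task, args=(f"task {i}", 0.5, results))
    for i in range(1, 4)
]

start = time.perf_counter()
for t in threads:
    t.start()  # all 3 tasks begin right away
for t in threads:
    t.join()   # wait for every task to finish
elapsed = time.perf_counter() - start

# The 3 waits overlap, so the total is close to 0.5 s rather than 1.5 s
print(f"{len(results)} tasks finished in {elapsed:.2f} s")
```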

-

Python reminder: Invertebrates are my specialty

“The term Python is an ambiguous vernacular word referring to a wide variety of species of snakes belonging to the Pythonidae and Loxocemidae families. Python is the scientific name of a genus of snakes in the Pythonidae family.” (Wikipedia, French version)

Python is also a programming language that lets us write cool programs that can be parallelized. We are talking here about programs, not scripts. If you wish to learn Python, we recommend this awesome book (note that the book is in French; if you can’t read French, Google is your best friend).

Ok, you’re finally ready for the adventure!!

Let’s start by showing you the main differences between multiprocessing and multithreading using a single module, “concurrent.futures”, which lets us parallelize with threads as well as with processes.

```python
from concurrent import futures
```

The following code is taken from this site:

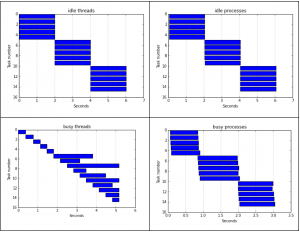

We modified it and added a few comments to make it easier to understand. The following images are the original ones used by the author.

The author uses 5 workers, meaning 5 threads or processes running at the same time, for 15 iterations:

```python
WORKERS = 5
ITERATIONS = 15
```

Here is the function that was used:

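```python
import datetime
import itertools

import numpy as np

def test_multithreading(executor, function):
    # Reference time shared by every task
    start_time = datetime.datetime.now()
    # `executor` is futures.ThreadPoolExecutor or futures.ProcessPoolExecutor
    with executor(max_workers=WORKERS) as ex:
        # Call function(start_time) ITERATIONS times, spread over WORKERS workers
        result = list(ex.map(function, itertools.repeat(start_time, ITERATIONS)))
    # Each call returns a (start, stop) pair; transpose into two arrays
    start, stop = np.array(result).T
    return start, stop
```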

To make it easier to follow, here is a second (less elegant) version of the function. It does the same thing, but with explicit “for” loops:

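```python
import datetime

import numpy as np

def test_multithreading(executor, function):
    # Same reference time for every task, as in the first version
    start_time = datetime.datetime.now()
    with executor(max_workers=WORKERS) as ex:
        # submit() schedules one call and immediately returns a Future
        processes = []
        for _ in range(ITERATIONS):
            processes.append(ex.submit(function, start_time))
        # result() blocks until the corresponding task has finished
        result = []
        for p in processes:
            result.append(p.result())
    # Each call returns a (start, stop) pair; transpose into two arrays
    start, stop = np.array(result).T
    return start, stop
```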

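Example of a call to the function:

```python
test_multithreading(futures.ThreadPoolExecutor, busy)
```

“busy” is one of the two test functions described below. Passing futures.ProcessPoolExecutor instead runs the same test with processes.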

Idle: the program pauses for 2 seconds.

Busy: the program performs a (relatively) long computation.
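The original “idle” and “busy” definitions only appear in the author's images, so here is a sketch of what they might look like. Our assumption: each function receives the shared `start_time` and returns the moments it started and stopped, in seconds relative to `start_time`, which is the `(start, stop)` pair that `test_multithreading` transposes into two arrays. The helper `elapsed_since` and the size of the loop in `busy` are our own choices:

```python
import datetime
import time

def elapsed_since(start_time):
    # Hypothetical helper: seconds elapsed between the shared reference time and now
    return (datetime.datetime.now() - start_time).total_seconds()

def idle(start_time):
    # Sketch of "idle": does nothing for 2 seconds
    start = elapsed_since(start_time)
    time.sleep(2)
    return start, elapsed_since(start_time)

def busy(start_time):
    # Sketch of "busy": a pure-Python, CPU-bound loop (arbitrary size)
    start = elapsed_since(start_time)
    total = 0
    for i in range(5_000_000):
        total += i * i
    return start, elapsed_since(start_time)
```

Both are defined at module level on purpose: ProcessPoolExecutor has to pickle the function it sends to each worker process, which only works for top-level functions.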

Results: for the “idle” function, the execution times of the 5 threads and of the 5 processes are pretty much the same, except for a small start-up delay for the processes.

However, when the operation is CPU-intensive (the “busy” function), threads are far slower than processes, because of the Global Interpreter Lock (GIL).

For more information about the origin of this code and these images, go to this site.
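To reproduce the effect without the author's full benchmark, here is a minimal, self-contained sketch of our own (not the author's code): a hypothetical CPU-bound function `cpu_bound` run 5 times through each executor. With the standard (GIL-enabled) CPython interpreter on a multicore machine, the process pool should finish noticeably faster; the exact timings depend on your hardware:

```python
import time
from concurrent import futures

def cpu_bound(n):
    # Pure-Python arithmetic: threads running it must take turns holding the GIL
    total = 0
    for i in range(n):
        total += i * i
    return total

def timed_run(executor_class, n_tasks=5, n=2_000_000):
    # Run n_tasks copies of cpu_bound on a pool with n_tasks workers and time it
    start = time.perf_counter()
    with executor_class(max_workers=n_tasks) as ex:
        results = list(ex.map(cpu_bound, [n] * n_tasks))
    return time.perf_counter() - start, results

if __name__ == "__main__":
    # The __main__ guard matters for ProcessPoolExecutor on platforms that
    # start worker processes with "spawn" (the default on Windows and macOS)
    t_threads, _ = timed_run(futures.ThreadPoolExecutor)
    t_procs, _ = timed_run(futures.ProcessPoolExecutor)
    print(f"5 threads:   {t_threads:.2f} s")
    print(f"5 processes: {t_procs:.2f} s")
```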

Seeing as we’re very nice people, we put together a small summary table for you:

| Threads | Processes |
|---|---|
| Creating threads is fast | Creating processes is slower |
| Threads share the same memory, so communication is fast and less memory is used | Processes use separate memory spaces, so communication is slower and harder, and more memory is used |
| In CPython, Python code effectively runs on a single core at a time | Take advantage of all the CPUs and cores of the machine |
| The Global Interpreter Lock (GIL) prevents threads from executing Python code simultaneously | Processes are independent (each has its own interpreter and GIL), which lets them bypass the GIL |
| Very good for communication (example: a server asking a running script for inputs) | Time-efficient, since they use the full capacity of the machine |
| The ‘threading’ module is a very powerful tool with many features | The ‘multiprocessing’ module is designed to be as close as possible to the ‘threading’ module |
| A fatal crash in one thread (e.g., a segmentation fault) takes down the whole process, including all the other threads | If a process crashes, the others are not affected, since they are independent |

Table showing the major differences between multiprocessing and multithreading in Python.
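The memory row of the table can be seen in a few lines. In this sketch of ours, a thread and a process each run the same hypothetical `increment` function on a module-level dictionary: the thread's change is visible to the main program, while the process only changes its own copy. It also shows how closely `multiprocessing.Process` mirrors `threading.Thread`:

```python
import multiprocessing
import threading

# A module-level object that both the thread and the process try to modify
counter = {"value": 0}

def increment():
    # Adds 1 to the copy of `counter` visible to whoever runs this function
    counter["value"] += 1

def run_in(worker_class):
    # threading.Thread and multiprocessing.Process share the same basic API
    worker = worker_class(target=increment)
    worker.start()
    worker.join()
    return counter["value"]

if __name__ == "__main__":
    print("after thread: ", run_in(threading.Thread))         # 1: the thread shares our memory
    print("after process:", run_in(multiprocessing.Process))  # still 1: the child updated its own copy
```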

All this is very cool, but in practice we have to know how to use the different modules that can help us take advantage of this extraordinary invention that is parallelization!

To our knowledge, three “pythonic” modules can currently do this job:

- The multiprocessing module

We recommend checking out this excellent article on the subject:

“Put Those CPUs to Good Use!”

- The threading module

This module is an excellent tool for parallelizing with threads in Python. Its design is loosely based on Java’s threading model, and it provides a real arsenal of tools for experienced programmers. However, the richness of this module makes it a bit complex and relatively long to master. For those of you who don’t wish to learn the threading module, there is a sub-library, multiprocessing.dummy, that lets us spawn threads. It replicates the API of multiprocessing, which gives access to the ‘map’ function (for examples of how to use ‘map’, refer to the article cited in the previous section).

Here is how to import its thread pool:

```python
from multiprocessing.dummy import Pool as ThreadPool
```

Note: we have included this ‘multiprocessing’ library in this section even though it is not part of the ‘threading’ module but of ‘multiprocessing’. We made this choice because ‘multiprocessing.dummy’ creates threads.
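Because multiprocessing.dummy mirrors multiprocessing, the same `map` call works with a pool of threads and with a pool of processes. A minimal sketch, where `square` is a hypothetical toy function and the pool size of 4 is arbitrary:

```python
from multiprocessing import Pool
from multiprocessing.dummy import Pool as ThreadPool

def square(x):
    # Toy function applied to every element of the input
    return x * x

if __name__ == "__main__":
    numbers = list(range(10))

    # Pool of 4 worker *threads* (multiprocessing.dummy)
    with ThreadPool(4) as pool:
        print(pool.map(square, numbers))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]

    # Same API, but with 4 worker *processes* (multiprocessing)
    with Pool(4) as pool:
        print(pool.map(square, numbers))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

`map` returns the results in the same order as the inputs, whichever worker computed them.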

- The concurrent.futures module

This module is relatively recent (it was added in Python 3.2) and provides a simpler interface over the 2 previous modules. But that’s not all: every submitted call creates a “future” object, which lets the user follow the progress of the process (or thread) and offers a wider range of actions on it, such as checking whether it is done, cancelling it, or retrieving its result or exception. Naturally, this extra bookkeeping can make it slower than the methods described above.

If you need to learn only one of these libraries, it should be this one.
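To give a taste of those “future” objects, here is a minimal sketch using `submit` and `futures.as_completed`. The `slow_square` task and its delays are hypothetical; swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` (inside an `if __name__ == "__main__":` guard) would run the same code with processes:

```python
import time
from concurrent import futures

def slow_square(x):
    # Hypothetical task: waits 0.1 * x seconds, then returns x squared
    time.sleep(0.1 * x)
    return x * x

results = {}
with futures.ThreadPoolExecutor(max_workers=3) as ex:
    # submit() returns a Future right away, one per call
    future_to_x = {ex.submit(slow_square, x): x for x in [3, 1, 2]}
    # as_completed() yields each Future as soon as its task has finished,
    # so the shortest tasks are usually reported first
    for f in futures.as_completed(future_to_x):
        x = future_to_x[f]
        results[x] = f.result()  # result() re-raises any exception from the task
        print(x, "->", results[x])

# Leaving the with-block waits for all tasks, so every Future is now done
print(all(f.done() for f in future_to_x))  # True
```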

Thank you and congratulations for reading this article until the end. We hope our first article has won you over. Don’t hesitate to send us any questions or comments you might have: the more feedback we get, the better our articles will be!
